All right, you win. We give in. Yes, you’re interested in browser standards and security protocols and upgrades to scripting languages and all of that. You’re also interested in blowing up pigs with a group of cheesed...

The Official AryanIct.com Company

The logo for Cooperative Linux, more popularly known as coLinux, sums up the attempted approach to the platform. Placing the Windows logo and Linux penguin in opposite ends of the yin yang summarizes not just this software, but the problem...

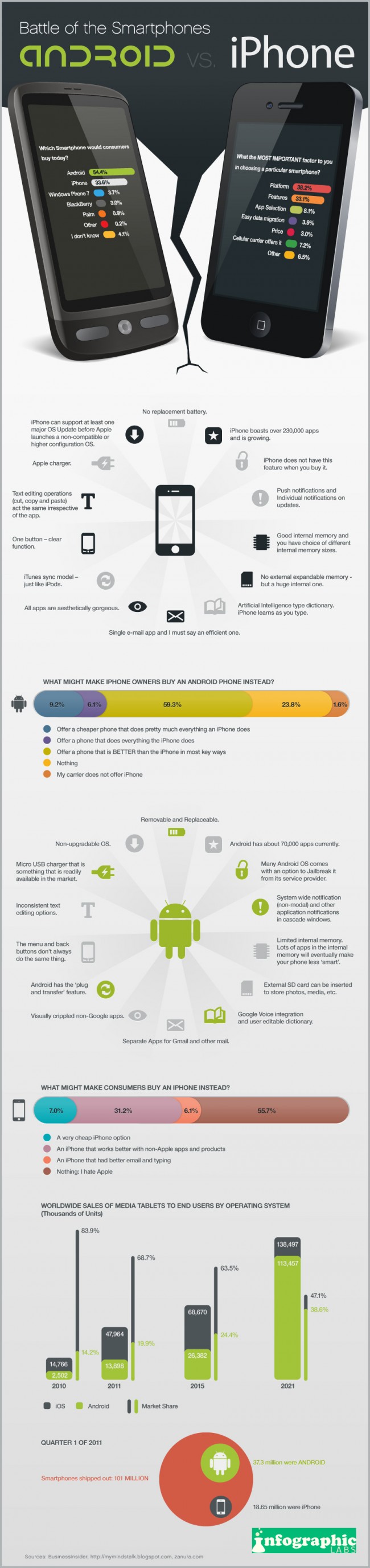

Android vs. iPhone: The above infographic shows a head-to-head comparison of the two biggest rivals in the smartphone market at the moment. Take a look at the pros and cons of each smartphone, along with the market success of each.

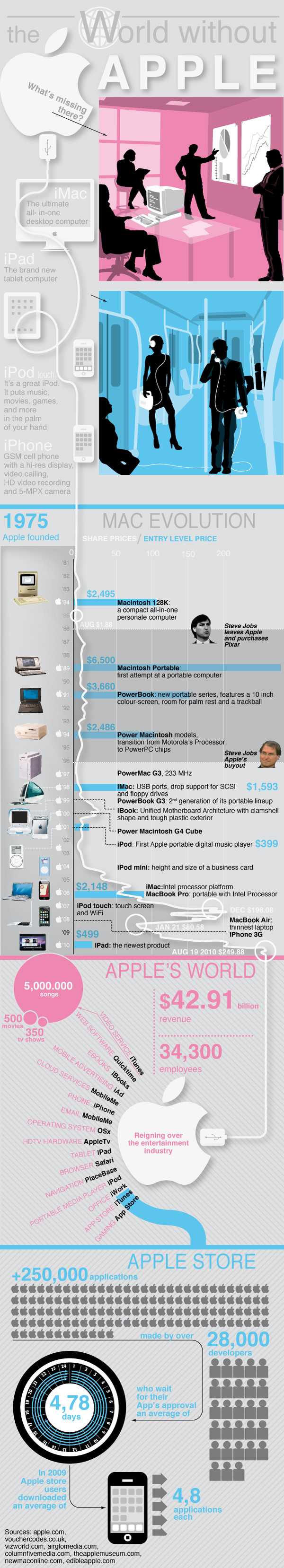

World Without Apple: This infographic presents a timeline for the most important product launches in Apple’s history, keeping an eye on the stock value at the time of every product launch. Guys at Infographic Labs analyzed several data around...

In its short life, the internet has gone through a number of geological ages. Terms like “Net 2.0” have been coined to place markers on these transition points. Another one is starting to make its rounds, and unlike most o...

It’s hard not to look at the iPhone 4S through the lens of Jobs’ recent death. It was released the day before he left us, and is the latest version of one of Apple’s most revolutionary products under his helm. Whatever...

Cloud technology is evolving so quickly that it’s nearly impossible to keep up with all of its developments. We’re not even going to try ourselves. Let’s instead do a brief news summary of some of the biggest recent advanc...

For my next column I was all set to write about the iPhone 4S, which was released to a flurry of reactions that were so-so. It will make an interesting column either way once it is crafted. However, it was only a few hours before I began...

Facebook, Twitter, and probably soon Google+, get most of the analysis of the social networking world. With that comes most of the attention of advertisers. This is the billion-user World Wide Web, though. For every bar that all the...

Was it just a few years ago that BlackBerry got the kind of endorsement that marketers dream of at night? Then-presidential candidate Barack Obama revealed that even he had fallen for the allure of the “Crackberry.” Estimates...

WordPress Usage Within Top 100K Internet Sites: WordPress’s popularity is undeniable, from family bloggers to some of the world’s most trafficked websites. It powers a good part of the Internet’s public websites. A few months ago...

It’s amazing how fast the iPad has gone from just entering the marketplace to having a huge place in the market. Just a year and a half since its introduction, and we’re already well past iPad 2. Even Rocky didn’t ma...